VMware vCloud Networking and Security (or vCNS for short) was the SDN layer for VMware vCloud Director prior to VMware NSX-V. vCNS provided the network multi-tenancy constructs required to separate customers and operate a public cloud; in simpler words, vCNS provided the networking technology that stopped your Coca-Cola tenant from seeing your Pepsi tenant. In 2016, VMware made the strategic decision to End of Life (EOL) vCNS and migrate customers to NSX-V (aka NSX for vSphere).

It was an excellent strategic decision for VMware but challenging for service providers to implement: while it consolidated VMware's SDN products, the upgrade process placed a significant burden on service providers. Swapping out an SDN layer is not like an iOS upgrade that interrupts your ability to pay for Starbucks: an SDN upgrade is the virtual equivalent of replacing your physical data centre network. Perhaps it is even more complex: the virtual world sometimes gives people the rope needed to hang themselves.

I was the Head of Architecture and Operations for a cloud service provider that used the vCloud stack and, by extension, vCNS. We were the largest in the country: hundreds of customers, thousands of networks, and an order of magnitude more VMs than the next service provider. Even with an incredible amount of planning and access to the best VMware personnel in the world, this would be a high-touch operation. vCNS to NSX upgrades are a one-way operation with no undo button: in the worst case, messing up could lead to 100% connectivity failure for all customers. This blog post is the story of the journey from vCNS to NSX-V.

tl;dr: We were successful!

When you're running a transformation programme of this magnitude, it's important to be guided by principles that are relevant to your environment and organisation. Principles influence your decision making and drive the way a programme is designed and projects are executed. After discussion with engineering teams and internal stakeholders, we agreed the programme would run with the following principles:

- At all times, understand and maximise our support position. At any point during the project, we wanted to know our support position with VMware: Were all our products under support and in a supported configuration? What do we need to do to minimise our support risks? It is not enough to simply claim our environment was supported: we needed a document that would prove we were supported.

- Maintain lockstep and transparency with our partners. We wanted to leverage VMware's vast resources, including the VMware Professional Services Organisation (PSO), VMware Global Support Services (GSS), and the local account teams who could instant message the internal product teams.

- Ensure that any changes minimise the fault domain. As a cloud service provider, any changes to the underlying infrastructure have the possibility of impacting multiple customers. When (not if) a mistake is made, we wanted the fault domain (or "blast radius") to be contained to the smallest possible impact.

- Make changes small and easy to roll back. It is preferable to break larger complex changes into many smaller changes. When a change failed, we wanted engineers to have a quick rollback plan. We understood that failed changes placed engineers under stress and affected their critical thinking.

- Strong change management discipline. We mandated that every change must be documented in a manner that would allow any VMware GSS staffer on duty to understand our environment and the change we performed. To this end, we logged pre-emptive support tickets for all our changes, so any GSS staffers would have advance knowledge of our environment. We mandated that all changes contained the following:

- Pre-change implementation test: To ensure that the environment was operating within normal parameters before implementation.

- Implementation plan: Step-by-step execution with shell commands documented and ready to be copied and pasted. If an engineer discovered a step was missing during the change, it was socially acceptable to abandon the change and retry at a later date.

- Post-change implementation test. This ensured the environment was operating within normal parameters after the implementation.

- Rollback plan. When (not if) a mistake was made, we wanted the engineer to know what to do. We changed our culture to make rollbacks socially acceptable: there was no heroism in trying to fix something on the spot.

- Post-rollback test. To make sure the rollback actually worked.

- Understand that everything is related. In a vCloud environment there are relationships between each of the components: vCD, vCNS/NSX, vCenter, ESXi and SRM. Some of these components need to be strongly coupled to work (vCenter and ESXi), others just need loose alignment (ESXi and SRM).
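As a minimal sketch of the change template above (not the tooling we actually used), the five mandatory sections can be modelled as a record that cannot be scheduled until every section is filled in. The class name, field names and sample entries are hypothetical.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class ChangeRecord:
    """Hypothetical change record holding the five mandatory sections."""
    title: str
    pre_change_test: List[str] = field(default_factory=list)
    implementation_plan: List[str] = field(default_factory=list)  # copy-and-paste-ready commands
    post_change_test: List[str] = field(default_factory=list)
    rollback_plan: List[str] = field(default_factory=list)
    post_rollback_test: List[str] = field(default_factory=list)

    def missing_sections(self) -> List[str]:
        """Names of mandatory sections that are still empty."""
        return [f.name for f in fields(self)
                if f.name != "title" and not getattr(self, f.name)]

    def ready_to_schedule(self) -> bool:
        """A change is only schedulable when no section is missing."""
        return not self.missing_sections()


# Illustrative draft: two sections written, three still to do.
draft = ChangeRecord(
    title="Upgrade vCD 5.5.4 to 5.5.6",
    pre_change_test=["confirm the environment is within normal parameters"],
    implementation_plan=["# documented shell commands go here"],
)
print(draft.missing_sections())
```

A gate like `ready_to_schedule()` makes the discipline mechanical: a change without a rollback plan or post-rollback test never reaches the calendar.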

All in all, this was a change that supported a $100 million business. No pressure! I wrote the vCNS to NSX upgrade plan and engaged VMware Professional Services to verify it. The conversation went along the lines of:

Me to VMware PSO: I've written a 12 step plan. I'd like you to verify.

VMware PSO: This plan is very well researched...can we use it for another customer?

Me: :)

When you have principles, the process can be more important than the end result. This blog post is not the quick way to upgrade vCNS to NSX: it is the principled, service provider-oriented way of upgrading. This post may appear long, but it is just a summary of a year's worth of engineering transformation...

Underlay network considerations

vCDNI (vCloud Director Network Isolation) is the network multi-tenancy protocol used by vCNS, and VXLAN (Virtual Extensible LAN) is its successor. An important VXLAN requirement is that the end-to-end network underlay between ESXi hosts must have an MTU of 9000. Every team that owns part of the end-to-end underlay must be made aware of this requirement: if you have converged compute infrastructure such as Cisco UCS, you'll need to involve your compute team as well! In my case, both the network and compute staff reported to me. This blog post doesn't go into the process of adjusting MTUs or creating additional networks for NSX.
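That said, the arithmetic behind the larger MTU is easy to sketch. VXLAN wraps each guest Ethernet frame in VXLAN, UDP and IP headers, so the underlay has to carry more than the guest's own MTU. The header sizes below are the standard ones; the function names and the assumption of an IPv4 underlay are mine, for illustration only.

```python
# Header sizes in bytes for VXLAN over an IPv4 underlay (assumption: no IPv6 outer header).
INNER_ETHERNET = 14   # guest frame header, carried as VXLAN payload
INNER_VLAN_TAG = 4    # only present if the guest frame is 802.1Q tagged
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20


def encapsulation_overhead(inner_vlan_tag: bool = False) -> int:
    """Bytes added on top of the guest MTU, as seen by the underlay IP MTU."""
    overhead = INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4
    if inner_vlan_tag:
        overhead += INNER_VLAN_TAG
    return overhead


def required_underlay_mtu(guest_mtu: int, inner_vlan_tag: bool = False) -> int:
    """Smallest underlay MTU that carries a full-size guest packet unfragmented."""
    return guest_mtu + encapsulation_overhead(inner_vlan_tag)


def max_guest_mtu(underlay_mtu: int, inner_vlan_tag: bool = False) -> int:
    """Largest guest MTU that fits inside a given underlay MTU."""
    return underlay_mtu - encapsulation_overhead(inner_vlan_tag)
```

Under these assumptions, a standard 1500-byte guest MTU needs at least 1550 bytes on the underlay, and a 9000-byte underlay leaves room for guest MTUs up to 8950.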

Interoperability checks

To verify that our upgrades resulted in a configuration that was supported, we used the VMware Product Interoperability Matrix to perform a 2-way check for each of the 5 products involved. We intentionally chose a 2-way check because we had found a scenario where Product Team A certified their interoperability with Product Team B, but not vice versa. I'm not going to name names.
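To illustrate the 2-way check, here is a minimal sketch that treats each product's published interoperability list as its own data set and only passes when both sides list each other. The data structure, function name and sample entries are hypothetical; the real source of truth is the VMware Product Interoperability Matrix, which we checked by hand.

```python
from itertools import combinations
from typing import Dict, List, Set, Tuple

Release = Tuple[str, str]  # (product, version)


def two_way_failures(stack: Dict[str, str],
                     certified: Dict[Release, Set[Release]]
                     ) -> List[Tuple[Release, Release]]:
    """Return every (claimant, partner) direction that is NOT certified.

    `certified[a]` is the set of releases that product release `a` says it
    interoperates with. A pair only passes if each side lists the other.
    """
    failures = []
    for a, b in combinations(stack.items(), 2):
        if b not in certified.get(a, set()):
            failures.append((a, b))   # A's list does not include B
        if a not in certified.get(b, set()):
            failures.append((b, a))   # B's list does not include A
    return failures


# Hypothetical data: Product A lists Product B, but not vice versa.
stack = {"Product A": "1.0", "Product B": "2.0"}
certified = {
    ("Product A", "1.0"): {("Product B", "2.0")},
    ("Product B", "2.0"): set(),
}
print(two_way_failures(stack, certified))
```

A 1-way check from Product A's side would have passed this stack; the 2-way check reports the missing direction.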

Now let us go on a journey, starting at step zero.

Step Zero. Understand your Current State and Target State

"No matter where you go, there you are." - Buckaroo Banzai

Don't start unless you know where you are. If your environment is a mess, that's ok! That could be the reason you were hired! There are many ESXi audit tools, but they produce a lot of unnecessary information. The nature of vCloud is that you cannot simply upgrade to the latest version: you must upgrade through different versions to get to where you want to go. I condensed the contents of an RVTools output into a single table that summarised 1. where we were, and 2. where we wanted to go. The target versions may seem dated, but they were the latest versions at the time.

(scroll to the right to see the target state)

After an environment audit and analysis, we highlighted some issues.

- There were different versions of ESXi in the environment. Different versions of ESXi made troubleshooting more difficult and decreased supportability.

- vCNS was very close to End of Service Life (EOSL). We contacted VMware so they could seek internal approvals for extended support. We didn't want GSS staffers to be surprised by our vCNS-related tickets.

- VMware SRM was in use. Though SRM does not integrate with vCD, it did integrate with the several vCenter servers and ESXi hosts that delivered services to customers. Obviously this was not ideal. Thus, any change needed to determine whether the vCenter-SRM and ESXi-SRM support position was impacted.

Step 1. Have a consistent version of ESXi in the environment.

Based on the current state of our versions, we decided the best course of action would be to upgrade all ESXi hosts to the same version (not the latest version). This would minimise variation and unpredictable behaviour.

Upgrading ESXi hosts was also a good opportunity for engineers to get experience in the environment with low-risk changes, and to practice writing the documentation required for disciplined change management. It was also a quick win on the board.

Step 2A. Upgrade vCenter from 5.5.0 Update 2B to 6.0 U1.

The vCenter version must always be the same as or newer than the ESXi hosts it manages. We decided to upgrade vCenter first, but we faced challenges in selecting the version of vCenter. By consulting the VMware Product Interoperability Matrix for these products (pictured below), it became clear there were two constraints.

- It was not possible to upgrade vCenter past 6.0 U1 without breaking vCD support. Although there were newer versions of vCenter available, 6.0 U1 was the latest version that supported vCD 5.5.4. 6.0 U2 did not support vCD 5.5.4.

- Upgrading vCenter to 6.0 would make SRM temporarily unsupported. This is because vCenter 6.0.0 U1 was not supported with SRM 5.8.1.

To overcome the unsupported vCenter-SRM limitation, we performed a risk analysis and decided to perform this step and the next (an SRM upgrade) on different days of the same weekend. Under normal circumstances, we performed only one change per weekend. To mitigate the risk of this exception, we declared a whole-of-environment change freeze to reduce the factors that might trigger a site failure.

Interoperability check 2A-1: Verification of upgrade path: 5.5.0 U2B to 6.0 U1 was possible.

Interoperability check 2A-2: Verification that vCD maintained stack interoperability.

Interoperability check 2A-3: Verification that vCNS maintained stack interoperability.

Interoperability check 2A-4: Verification that vCenter maintained stack interoperability.

Interoperability check 2A-5: Verification that ESXi maintained stack interoperability.

Interoperability check 2A-6: Verification that SRM maintained stack interoperability.

The incompatibility between SRM 5.8.1 and vCenter 6.0.0 U1 is acknowledged, hence Step 2B involves upgrading SRM.

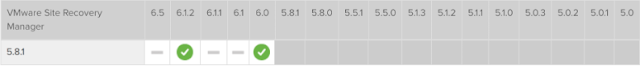

Step 2B. Upgrade SRM from 5.8.1.1 to 6.0.0.1.

Whenever you are planning a VMware upgrade, the question very quickly becomes "to which version?" The easiest way of determining the solution is to check the Product Interoperability Matrix and see what is possible.

Interoperability check 2B-1: Verification of upgrade path: 2 options possible.

The matrix tells us the following:

- Upgrading SRM 5.8.1.1 to 6.0.0. Possible.

- Upgrading SRM 5.8.1.1 to 6.1.2. Possible, but further interoperability checks show that 6.1.2 is not compatible with ESXi 6.0 U1. Complexity abounds!

- Upgrading SRM to 6.1, 6.1.1, or 6.5. Not possible.

When performing the bug scrub/release note scrub for 6.0.0, we found KB article 2111069, which recommended 6.0.0.1 over 6.0.0 due to an issue in the upgrade process. This goes to show that it is important to read the complete release notes for any product under consideration.
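The selection logic in this step can be sketched as a filter: keep only targets that have a direct upgrade path, then drop any that break interoperability with the rest of the stack. The sketch below hard-codes the findings stated above for SRM 5.8.1.1; the variable and function names are hypothetical, and the data is no substitute for the matrix itself.

```python
# Findings for SRM 5.8.1.1 as described in the text above.
CANDIDATES = ["6.0.0", "6.1", "6.1.1", "6.1.2", "6.5"]
DIRECT_UPGRADE_TARGETS = {"6.0.0", "6.1.2"}          # 6.1, 6.1.1 and 6.5: no direct path
INCOMPATIBLE_WITH = {"6.1.2": "ESXi 6.0 U1"}         # target -> component it does not support


def evaluate(target: str) -> str:
    """Classify a candidate target version as viable or rejected (with reason)."""
    if target not in DIRECT_UPGRADE_TARGETS:
        return "rejected: no direct upgrade path"
    if target in INCOMPATIBLE_WITH:
        return "rejected: not compatible with " + INCOMPATIBLE_WITH[target]
    return "viable"


viable = [t for t in CANDIDATES if evaluate(t) == "viable"]
for target in CANDIDATES:
    print(target, "->", evaluate(target))
```

The filter leaves the 6.0.0 line as the only viable target; the release-note scrub then refined that to 6.0.0.1, which is something no matrix lookup would have told us.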

After this step, our product stack moved to the following versions.

For completeness, we performed interoperability checks with the components that SRM integrated with: vCenter, ESXi, and the SRA storage adapters (not pictured). vCloud and vCNS interoperability was not checked, as SRM does not integrate with these components.

Interoperability check 2B-2: Verify that SRM 6.0 worked with the existing version of vCenter.

Interoperability check 2B-3: Verify that SRM 6.0 worked with the existing version of ESXi.

Step 3. Upgrade all ESXi hosts to ESXi 6.0 U1B.

Now that vCenter was upgraded, we could upgrade the ESXi hosts it managed. Although there were versions of ESXi newer than 6.0 U1, the version of ESXi must not exceed the vCenter version (currently 6.0 U1).
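The ordering rule (ESXi must not exceed vCenter) is simple enough to check mechanically. Below is a rough sketch; the parser and function names are hypothetical, and it compares only down to the update level, ignoring the trailing patch letter. That is my reading of how 6.0 U1B sits under vCenter 6.0 U1 in this plan, not an official VMware rule.

```python
import re
from typing import Tuple

# Matches strings such as "6.0 U1", "6.0 U1B", "6.5.0" or "5.5.0 Update 2B".
_VERSION = re.compile(
    r"^(\d+)\.(\d+)(?:\.\d+)*(?:\s+(?:U|Update\s*)(\d+)[A-Za-z]?)?$"
)


def parse(version: str) -> Tuple[int, int, int]:
    """Return (major, minor, update); the patch letter is deliberately dropped."""
    match = _VERSION.match(version.strip())
    if not match:
        raise ValueError("unrecognised version string: " + repr(version))
    major, minor, update = match.groups()
    return int(major), int(minor), int(update or 0)


def esxi_allowed(vcenter: str, esxi: str) -> bool:
    """True if the ESXi version does not exceed the vCenter version."""
    return parse(esxi) <= parse(vcenter)


print(esxi_allowed("6.0 U1", "6.0 U1B"))   # the upgrade performed in this step
print(esxi_allowed("6.0 U1", "6.0 U2"))    # a newer ESXi than vCenter
```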

Though this was simple, upgrading hundreds of ESXi hosts is a time-consuming operation. Good work for more junior staff who want to get experience with lower-risk changes.

As usual, we performed the 6-way interoperability check.

Interoperability check 3-1: Verification of upgrade path.

Interoperability check 3-2: Verification that vCD maintained stack interoperability.

Interoperability check 3-3: Verification that vCNS maintained stack interoperability.

Interoperability check 3-4: Verification that vCenter maintained stack interoperability.

Interoperability check 3-5: Verification that ESXi maintained stack interoperability.

Interoperability check 3-6: Verification that SRM maintained stack interoperability.

Step 4. Upgrade vCloud Director from 5.5.4 to 5.5.6.

The engineers felt confident this was a minor point upgrade and executed it without issue. It was a good opportunity to write some pre-implementation and post-implementation test scripts for vCD, as well as some observability checks.
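As a hedged sketch of what pre- and post-implementation test scripts can look like (these are not our actual scripts), the idea is to run the same named checks before and after the change and flag anything that passed before but fails afterwards. The helper names are hypothetical; real checks might probe vCD endpoints or services, which are stubbed out here as plain callables.

```python
from typing import Callable, Dict, List

Check = Callable[[], bool]


def run_checks(checks: Dict[str, Check]) -> Dict[str, bool]:
    """Run every named check; an exception counts as a failure."""
    results = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            results[name] = False
    return results


def failing(results: Dict[str, bool]) -> List[str]:
    """Checks that did not pass (a reason not to start the change)."""
    return sorted(name for name, ok in results.items() if not ok)


def regressions(before: Dict[str, bool], after: Dict[str, bool]) -> List[str]:
    """Checks that passed before the change but not after it."""
    return sorted(name for name, ok in before.items()
                  if ok and not after.get(name, False))
```

If `failing()` is non-empty on the pre-test, the change does not start; if `regressions()` is non-empty on the post-test, the rollback plan kicks in.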

There were 4 direct upgrade paths:

- Upgrade vCD 5.5.4 to 5.5.5.

- Upgrade vCD 5.5.4 to 5.5.6.

- Upgrade vCD 5.5.4 to 8.0.

- Upgrade vCD 5.5.4 to 8.0.1

We made a qualitative decision to upgrade to 5.5.6, as our engineers did not have the hands-on experience to make the jump directly to version 8. It's good for engineers to get their hands dirty on low-risk changes, and get familiar with the environment: what jump hosts to use, IP addresses of the servers, passwords, script up the post-tests, etc. We could have used the same rationale to justify an upgrade from 5.5.4 to 5.5.5 then 5.5.6, but we agreed as a team to take a small step before taking a large one.

Interoperability check 4-1: Verification of upgrade path.

Interoperability check 4-2: Verification that vCD maintained stack interoperability.

Interoperability check 4-3: Verification that vCNS maintained stack interoperability.

Interoperability check 4-4: Verification that vCenter maintained stack interoperability.

Interoperability check 4-5: Verification that ESXi maintained stack interoperability.

The 6th interoperability check for SRM was not required, as it does not integrate with vCD. After this step was complete, our product versions were as follows.

Step 5. Upgrade vCloud Director from 5.5.6 to 8.0.2.

This is where the fun began and engineer tensions started to rise: vCD is the brains of the service provider platform, and engineers didn't want to work on systems that could have a platform-wide customer-facing impact. This is where our change discipline came into play: so long as our change management methodology was strong, our execution would become easier and less risky. By performing an upgrade from vCD 5.5.4 to 5.5.6, our engineers got some familiarity that would be useful during this significant change.

vCloud Director 8-series was a generational change for VMware. We chose vCD 8.0.2 because:

- Upgrading to vCD 8.0.2 allowed vCNS to be upgraded to NSX. Prior to vCD 8.0.2, vCNS could not be upgraded to NSX.

- 8.0.2 was the final version of vCloud to support vCNS. Newer versions of vCloud use NSX only.

The interoperability checks confirmed that our environment was in a supported state post-change.

Interoperability check 5-1: Verification of upgrade path.

Interoperability check 5-2: Verification that vCD maintained stack interoperability.

Interoperability check 5-3: Verification that vCNS maintained stack interoperability.

Interoperability check 5-4: Verification that vCenter maintained stack interoperability.

Interoperability check 5-5: Verification that ESXi maintained stack interoperability.

Again, an SRM check was not necessary as it did not integrate with vCloud.
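Which products are in scope for a given change follows from which products integrate with each other. Here is a small sketch using only the relationships described in this post (SRM integrates with vCenter and ESXi, but not with vCD or vCNS/NSX); the dictionary and function names are hypothetical, and "SDN" stands for vCNS or NSX depending on the step.

```python
from typing import List

# Integration relationships as described in this post.
INTEGRATES_WITH = {
    "vCD": {"SDN", "vCenter", "ESXi"},
    "SDN": {"vCD", "vCenter", "ESXi"},
    "vCenter": {"vCD", "SDN", "ESXi", "SRM"},
    "ESXi": {"vCD", "SDN", "vCenter", "SRM"},
    "SRM": {"vCenter", "ESXi"},
}


def products_in_scope(upgraded: str) -> List[str]:
    """The upgraded product plus everything it integrates with."""
    return sorted({upgraded} | INTEGRATES_WITH[upgraded])


def is_symmetric() -> bool:
    """Sanity check: if A integrates with B, B must integrate with A."""
    return all(a in INTEGRATES_WITH[b]
               for a, partners in INTEGRATES_WITH.items()
               for b in partners)


for product in INTEGRATES_WITH:
    print(product, "->", products_in_scope(product))
```

A vCD change therefore never pulls SRM into scope, while a vCenter or ESXi change pulls in all five products.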

After the upgrade, the new vCD8 HTML5 user interface brought a lot of joy to our engineers and customers! It was visual confirmation that we were on a path to success. Our version matrix now looked like this.

Step 6A. Upgrade vCNS 5.5.4 to NSX 6.2.5.

This is the part where we swapped the vCloud SDN brains from vCNS to NSX. We had a few options for which NSX version to pick, but the choice became clear quite quickly:

- Upgrade from vCNS 5.5.4 to NSX 6.2.5. The baseline option.

- Upgrade from vCNS 5.5.4 to NSX 6.2.7. NSX cannot be upgraded from 6.2.7 to 6.3.1. This is because 6.2.7 was released after 6.3.1, making it a "back in time" upgrade.
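The "back in time" rule can be sketched as a comparison of release order rather than version numbers. The ordering below is taken from the description above (6.2.7 shipped after 6.3.1) plus the inference that 6.2.5 predates 6.3.1; no actual release dates are assumed, and the names are hypothetical.

```python
from typing import List

# Chronological release order (oldest first), as described in the text.
# Note that 6.2.7 comes AFTER 6.3.1 despite its lower version number.
RELEASE_ORDER = ["6.2.5", "6.3.1", "6.2.7"]


def is_back_in_time(current: str, target: str) -> bool:
    """True if `target` was released before `current`."""
    return RELEASE_ORDER.index(target) < RELEASE_ORDER.index(current)


def dead_ends(candidates: List[str], final_target: str) -> List[str]:
    """Candidates from which the final target can no longer be reached."""
    return [c for c in candidates if is_back_in_time(c, final_target)]


print(dead_ends(["6.2.5", "6.2.7"], final_target="6.3.1"))
```

The practical lesson is to pick each intermediate version with the final target in mind: the highest version number available today can be a dead end tomorrow.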

After this step, we would finally be rid of vCNS! We'd still have vShield Edges and VCDNI port groups: this would be taken care of in subsequent steps.

Interoperability check 6A-1: Verification of upgrade path.

In this case, the proof was the upgrade bundle: VMware-vShield-Manager-upgrade-bundle-toNSX-6.2.5-4818372.tar.gz (build 4818372, release date 2017-01-05)

Interoperability check 6A-2: Verification that vCD maintained stack interoperability.

Interoperability check 6A-3: Verification that NSX maintained stack interoperability.

Interoperability check 6A-4: Verification that vCenter maintained stack interoperability.

Interoperability check 6A-5: Verification that ESXi maintained stack interoperability.

Step 6B. Upgrade vShield Edges to NSX Edges.

vShield Edges are the VNFs (virtual network functions) that separate customers from each other and the outside world. Edge redeployments don't change the underlying platform. As the fault domain was limited to the customer, this was a matter of contacting each customer to find their preferred network maintenance window time.

The NSX Edges still support vCDNI, so there is no need to change network protocols in this step. However, this step must be performed as support for vCNS Edges is removed in NSX 6.3.0.

Step 6C. Change network from VCDNI to VXLAN.

This was the Rubicon. Once you pressed the Migrate to VXLAN button, there was no return, no undo button. It was a destructive one-way operation. Tom Fojta's blog documents what happens when you press the button.

- A "dummy" VXLAN logical switch is created.

- All VMs connected to the VCDNI network are reconnected to the new VXLAN logical switch.

- Edge Gateways connected to the VCDNI network are connected to the new VXLAN logical switch.

- Org VDC/vApp network backing is changed in vCloud DB to use the new VXLAN logical switch.

- Original VCDNI port group is deleted.
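To make the one-way nature concrete, here is a toy in-memory model of the five steps listed above. It is purely illustrative: the dictionary layout and names are hypothetical and have nothing to do with how vCD or NSX store this state internally.

```python
from typing import Dict


def migrate_to_vxlan(env: Dict, network: str) -> str:
    """Toy model of the migration order; mutates `env` and returns the new switch name."""
    old = env["backing"][network]
    new = "vxlan-" + network

    env["switches"].add(new)                        # 1. create the VXLAN logical switch
    for nic, attached in env["vm_nics"].items():    # 2. reconnect VMs
        if attached == old:
            env["vm_nics"][nic] = new
    for nic, attached in env["edge_nics"].items():  # 3. reconnect Edge Gateways
        if attached == old:
            env["edge_nics"][nic] = new
    env["backing"][network] = new                   # 4. switch the network backing
    env["switches"].discard(old)                    # 5. delete the original port group
    return new
```

After step 5 nothing in the model still refers to the old port group, which is why there is nothing left to roll back to.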

All in all, 99% of our Org VDC/vApps cut across perfectly. We encountered some edge (excuse the pun) cases where network traffic was unresponsive, but this was solved with an edge redeploy.

Step 7. Upgrade vCenter 6.0 U1 to U2.

After a vCNS to NSX-V upgrade, this was a piece of cake so long as you avoid the temptation to upgrade to the latest and greatest vCenter 6.0 U3. This would have resulted in upgrading to a dead end: vCenter 6.0 U3 was released 6 months after 6.5, and is intended for customers staying on the 6.0 release stream. Upgrading from 6.0 U3 to 6.5 is considered a "back in time" upgrade and is impossible.

Our interoperability matrix checks verified that the environment would be in a 100% supported state after the upgrade.

Interoperability check 7-1: Verification of upgrade path.

Interoperability check 7-2: Verification that vCD maintained stack interoperability.

Interoperability check 7-3: Verification that NSX maintained stack interoperability.

Interoperability check 7-4: Verification that vCenter maintained stack interoperability.

Interoperability check 7-5: Verification that ESXi maintained stack interoperability.

Interoperability check 7-6: Verification that SRM maintained stack interoperability.

As vCenter integrates with SRM, an interoperability check was required.

After the upgrade, the version matrix looked like this.

Step 8. Upgrade ESXi 6.0 U1 to U2.

After vCenter was upgraded, we performed the corresponding ESXi upgrade. Until this step was complete, it was not possible to upgrade directly to vCD 8.20, as this requires ESXi 6.0 U2. By now, our engineers were getting used to the cycle of change.

The usual interoperability checks verified that we would be in a supported state, post-change.

Interoperability check 8-1: Verification of upgrade path.

Interoperability check 8-2: Verification that vCD maintained stack interoperability.

Interoperability check 8-3: Verification that NSX maintained stack interoperability.

Interoperability check 8-4: Verification that vCenter maintained stack interoperability.

Interoperability check 8-5: Verification that ESXi maintained stack interoperability.

Interoperability check 8-6: Verification that SRM maintained stack interoperability.

After the upgrade, our versions were:

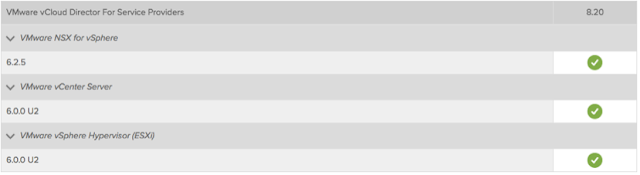

Step 9. Upgrade vCloud Director from 8.0.2 to 8.20.

This step upgraded us to the latest publicly available version of vCD (at the time). We won!

The usual interoperability checks verified that we were supported. By this time, my engineers started to demand that their colleagues performed interoperability checks on their non-VMware upgrades. They recognised the value of being able to prove that you had a supported configuration. It's the journey that changes you, not the destination.

Interoperability check 9-1: Verification of upgrade path.

Interoperability check 9-2: Verification that vCD maintained stack interoperability.

Interoperability check 9-3: Verification that NSX maintained stack interoperability.

Interoperability check 9-4: Verification that vCenter maintained stack interoperability.

Interoperability check 9-5: Verification that ESXi maintained stack interoperability.

As this was a vCD upgrade, no SRM interoperability check was necessary.

After this change, we were a step closer to a fully upgraded VMware stack.

Step 10. Upgrade NSX from 6.2.5 to 6.3.1.

This step upgraded us to the latest publicly available version of NSX (at the time). Another big win for the engineering team! This release of NSX 6.3 removed support for vShield Edges, which we had long ago replaced with NSX Edges.

Though interoperability checks are tedious, they allow us to prove to any VMware GSS staffer that we are in a 100% supported configuration.

Interoperability check 10-1: Verification of upgrade path.

Interoperability check 10-2: Verification that vCD maintained stack interoperability.

Interoperability check 10-3: Verification that NSX maintained stack interoperability.

Interoperability check 10-4: Verification that vCenter maintained stack interoperability.

Interoperability check 10-5: Verification that ESXi maintained stack interoperability.

As this was an NSX upgrade, no SRM check was required.

Step 11A. Upgrade vCenter from 6.0 U2 to 6.5.0.

This step upgraded us to the latest publicly available version of vCenter (at the time).

When we performed the interoperability check, there were some caveats that were revealed for NSX.

Interoperability check 11A-1: Verification of upgrade path.

Interoperability check 11A-2: Verification that vCD maintained stack interoperability.

Interoperability check 11A-3: Verification that NSX maintained stack interoperability.

Note: VMware vSphere 6.5a is the minimum supported version with NSX for vSphere 6.3.0 (KB 2148841).

Interoperability check 11A-4: Verification that vCenter maintained stack interoperability.

Note: VMware vSphere 6.5a is the minimum supported version with NSX for vSphere 6.3.0 (KB 2148841).

Interoperability check 11A-5: Verification that ESXi maintained stack interoperability.

Interoperability check 11A-6: Verification that SRM maintained stack interoperability.

We acknowledged that interoperability with SRM would break, so we upgraded SRM in the next step.

After this step was complete, our versions looked like this.

Step 11B. Upgrade SRM from 6.0.0.1 to 6.1.1.

Upgrading to SRM 6.1.1 was the next optimal step; however, this only became apparent after investigating the other courses of action.

- vCenter 6.5 does not support SRM 6.0.0.1. SRM will not function properly until this upgrade step is complete.

- It is not possible to upgrade from SRM 6.0.0.1 to 6.5 directly. You must upgrade through 6.1.1 or 6.1.2.

- Do not upgrade to 6.1.2. This was released after SRM 6.5 and is intended for customers staying on the 6.1 release train (a "back in time" release). It is not possible to upgrade from 6.1.2 to 6.5.

Interoperability checks showed that we would be supported after this change was complete.

Interoperability check 11B-1: Verification of upgrade path.

Interoperability check 11B-2: Verification of interoperability with vCenter.

Interoperability check 11B-3: Verification of interoperability with ESXi.

This took us to the following versions.

Step 11C. Upgrade SRM from 6.1.1 to 6.5.

This step upgraded us to the latest publicly available version of SRM (at the time).

Our interoperability checks verified that we would be supported after the change was complete.

Interoperability check 11C-1: Verification of upgrade path.

Interoperability check 11C-2: Verification of interoperability with vCenter.

Interoperability check 11C-3: Verification of interoperability with ESXi.

After the upgrade, our versions were:

Step 12. Upgrade ESXi 6.0 U2 to ESXi 6.5.

This step upgraded us to the latest publicly available version of ESXi (at the time).

Our final interoperability check would confirm that our environment was on the latest version of all VMware components and was 100% supported!

Interoperability check 12-1: Verification of upgrade path.

Interoperability check 12-2: Verification that vCD maintained stack interoperability.

Interoperability check 12-3: Verification that NSX maintained stack interoperability.

Interoperability check 12-4: Verification that vCenter maintained stack interoperability.

Interoperability check 12-5: Verification that ESXi maintained stack interoperability.

Interoperability check 12-6: Verification that SRM maintained stack interoperability.

So at the end of this journey, our VMware stack versions look like this.
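As a recap, the version-changing steps in this post can be replayed in a few lines to show the stack after any step. The step list is transcribed from the step headings above. The starting ESXi entry is "mixed" because the individual starting versions are not listed here; Step 1 is omitted because its target version is not stated, and the edge redeployment and VXLAN migration are omitted because they change no product version. The names are hypothetical.

```python
from typing import Dict, Iterator, List, Tuple

START = {
    "vCD": "5.5.4",
    "SDN": "vCNS 5.5.4",
    "vCenter": "5.5.0 U2B",
    "ESXi": "mixed",
    "SRM": "5.8.1.1",
}

# (step, product, version after the step)
STEPS: List[Tuple[str, str, str]] = [
    ("2A", "vCenter", "6.0 U1"),
    ("2B", "SRM", "6.0.0.1"),
    ("3", "ESXi", "6.0 U1B"),
    ("4", "vCD", "5.5.6"),
    ("5", "vCD", "8.0.2"),
    ("6A", "SDN", "NSX 6.2.5"),
    ("7", "vCenter", "6.0 U2"),
    ("8", "ESXi", "6.0 U2"),
    ("9", "vCD", "8.20"),
    ("10", "SDN", "NSX 6.3.1"),
    ("11A", "vCenter", "6.5.0"),
    ("11B", "SRM", "6.1.1"),
    ("11C", "SRM", "6.5"),
    ("12", "ESXi", "6.5"),
]


def replay(start: Dict[str, str],
           steps: List[Tuple[str, str, str]]) -> Iterator[Tuple[str, Dict[str, str]]]:
    """Yield (step, snapshot of the whole stack) after each step."""
    stack = dict(start)
    for step, product, version in steps:
        stack[product] = version
        yield step, dict(stack)


snapshots = dict(replay(START, STEPS))
final = snapshots["12"]
for product, version in final.items():
    print(product, version)
```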

Step 13. Upgrade all VMware Tools instances.

Just kidding, nobody does this.

It's the journey, not the destination.

And thus ended the journey of a vCNS to NSX-V upgrade. In the end it took several thousand engineering hours of effort, but we got to where we needed to be: the beginning of the new VMware upgrade cycle. Though the planning was important, it was the principles that drove the planning. This plan cannot be reused for NSX-T, but the principles and methodology can be.